Why Designing Better AI Agents Means Rediscovering Object-Oriented Design

In the early days of my software career, I was deeply influenced by the work of Grady Booch, James Rumbaugh, and Ivar Jacobson, the pioneers whose ideas eventually formed the foundation of the Unified Modeling Language (UML). Before UML, each of them had developed his own approach to software modeling:

- Rumbaugh had the Object Modeling Technique (OMT), emphasizing analysis and structure.

- Booch had the Booch Method, which focused on design and architecture with an emphasis on clarity and iteration.

- Jacobson contributed Object-Oriented Software Engineering (OOSE), grounded in use cases and the user’s perspective.

When these three approaches merged in the mid-90s to create UML, it felt like a moment of unification—a comprehensive way to think about systems as composed of cooperating objects, each with clearly defined roles, boundaries, and responsibilities. As a developer, I found these ideas powerful: they brought structure to complexity and offered a way to reason about systems at every level.

What I didn’t expect was that, decades later, those very same principles would feel not just useful, but essential again—this time, in a completely new domain: AI agent development.

Today’s autonomous agents may use natural language instead of method calls, and foundation models instead of compiled code, but the same problems apply: agents that try to do too much become unreliable, hard to test, and impossible to evolve. What we need are well-designed, focused, orchestrated units of work—just like we used to build with classes and interfaces.

In fact, many of the most successful agentic architectures today feel like a return to the foundational values of object-oriented design: clarity, separation of concerns, and modularity. And those ideas, shaped by Booch, Rumbaugh, and Jacobson over 30 years ago, may hold the key to building sustainable, scalable, and cost-effective AI systems now.

Let’s explore how those principles—single responsibility, decomposition, orchestration, and aggregation—can help us design better AI agents, avoid the pitfalls of monolithic prompting, and create a more pragmatic path through this new generation of software. This is especially crucial as we navigate the challenges posed by advanced AI systems, ensuring their alignment with human goals and mitigating the risks of undesirable behavior.

Introduction to AI Agents

A Familiar Challenge in a New Form

When we first started building web and mobile applications at scale, we quickly realized that piling too much functionality into a single class—or a single team—leads to brittle systems. Bloated controller files, massive service classes, and tangled business logic became the enemy of agility and maintainability.

With AI agents, we’re in danger of repeating that mistake.

It’s tempting to build monolithic agents: a single agent that can plan, search, summarize, calculate, and act—using a dozen tools and juggling various memory sources. But as with traditional software, piling all that responsibility into one place causes several issues:

- It’s harder to reason about what the agent is doing.

- Errors become opaque and harder to trace.

- You lose the ability to optimise components in isolation.

- Performance suffers, both in cost and latency.

- Throughput drops, because a single agent juggling several tasks at once lets them interfere with one another.

We’ve seen this movie before. The antidote, once again, is clear design. The same principles that helped us tame complexity in enterprise software can help us do the same in AI systems.

Key Features of AI Models

AI models, which underpin AI agents, have several features that let them tackle complex tasks: machine learning, natural language processing, and computer vision. They can identify patterns in data, make autonomous decisions, and complete tasks in applications such as medical diagnosis, workflow optimization, and infrastructure management. They can also interact with external systems, other agents, and humans to achieve business objectives. Developing these models requires significant expertise in machine learning, software development, and data science, and involves training on large datasets so the models can perform their designated tasks effectively.

Single Responsibility: Narrow Focus, Better Results

In object-oriented design, the Single Responsibility Principle (SRP) states that a class should have only one reason to change. That is, it should do one thing well.

This principle is extremely helpful in the AI space. An agent designed to complete a complex workflow—say, generating a marketing strategy—doesn’t have to do it all itself. It can delegate parts of the task to smaller, specialized agents.

For example:

- A Data Agent might retrieve and clean up market data.

- A Copywriting Agent might draft emails and taglines.

- A Budgeting Agent might assess costs and ROI.

Each agent can be tested, evaluated, and optimized independently. You can iterate quickly, choose different model backends depending on the task, and reduce the memory pollution and token bloat that come with generic planning agents trying to “know it all.”

You wouldn’t give one class the job of file management, user authentication, and invoice generation. So why ask one AI agent to do research, planning, writing, and spreadsheet analysis? Instead, provide specific instructions to guide each AI agent’s functions, ensuring they use available tools effectively and perform their specialized tasks efficiently.
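As a minimal sketch of the marketing example above, each agent can be a small class with exactly one job. Everything here is hypothetical and for illustration only: the class names mirror the bullets above, `fake_llm` is an offline stand-in for a real model call, and the ROI formula is the usual (revenue - cost) / cost.

```python
from typing import Callable

# Hypothetical stand-in for a model call: takes a prompt, returns text.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Offline stub so the sketch runs without any API key."""
    return f"[model output for: {prompt[:40]}]"


class DataAgent:
    """Only retrieves and cleans market data (here: trims, lowercases, dedupes)."""

    def run(self, raw_rows: list[str]) -> list[str]:
        return sorted({row.strip().lower() for row in raw_rows if row.strip()})


class CopywritingAgent:
    """Only drafts copy; the model behind it is injected and swappable."""

    def __init__(self, llm: LLM) -> None:
        self.llm = llm

    def run(self, product: str) -> str:
        return self.llm(f"Write one tagline for {product}.")


class BudgetingAgent:
    """Only assesses cost and return on investment."""

    def run(self, cost: float, revenue: float) -> float:
        if cost <= 0:
            raise ValueError("cost must be positive")
        return (revenue - cost) / cost


if __name__ == "__main__":
    print(DataAgent().run([" Acme ", "acme", "", "Beta"]))  # ['acme', 'beta']
    print(BudgetingAgent().run(cost=100.0, revenue=150.0))  # 0.5
    print(CopywritingAgent(fake_llm).run("tea"))
```

Because each class has one reason to change, a new cleaning rule touches only `DataAgent`, and swapping the copywriting model touches only the argument passed to `CopywritingAgent`.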

Decomposition: Break Problems Into Manageable Parts

Good software developers learn early that one of the most important skills isn’t solving a problem—it’s breaking it down.

AI agents need the same thing, especially when dealing with complex challenges.

Large tasks should be deconstructed into subtasks, either programmatically or via prompting. This works in two ways:

- Static decomposition: Build a system of smaller agents that each handle a well-defined type of task.

- Dynamic decomposition: Use a planner agent that breaks tasks into steps and assigns them to other agents as needed.

This mirrors the way we used to design object hierarchies or use interfaces and polymorphism: identify clear responsibilities and isolate them in small, reusable units.

In the context of generative AI, this also improves control. A single prompt that tries to solve a broad task is unpredictable. Smaller, focused prompts (especially with well-defined output formats) are more predictable and easier to validate.

The result is not just better design—it’s better AI output.
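The two styles of decomposition described above can be sketched together. This is an illustration under stated assumptions, not a reference design: the `AGENTS` registry, the `plan` function, and the three toy agents are all hypothetical, and a real planner would probably ask a model for the steps rather than use a keyword rule.

```python
from typing import Callable

Agent = Callable[[str], str]

# Static decomposition: a fixed registry of narrowly scoped agents.
# Each lambda is a toy stand-in for a real agent.
AGENTS: dict[str, Agent] = {
    "research": lambda topic: f"notes on {topic}",
    "summarize": lambda text: text[:20],
    "draft": lambda brief: f"DRAFT: {brief}",
}


def plan(task: str) -> list[str]:
    """Hypothetical planner: decides which steps a task needs.

    A keyword rule keeps the sketch deterministic and testable.
    """
    steps = ["research", "summarize"]
    if "email" in task.lower():
        steps.append("draft")
    return steps


def run_task(task: str) -> str:
    """Dynamic decomposition: run the planned steps as a simple pipeline."""
    result = task
    for step in plan(task):
        result = AGENTS[step](result)
    return result


if __name__ == "__main__":
    print(plan("Email about pricing"))      # ['research', 'summarize', 'draft']
    print(run_task("Email about pricing"))  # DRAFT: notes on Email about
```

The planner only chooses steps. It never does the work itself, so each step can be validated on its own.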

Orchestration: The Conductor, Not the Performer

One of the key patterns in OOP is separation between business logic and control flow. You often find classes or services that orchestrate the work of others—without doing the work themselves.

In agent architecture, this role is critical.

An Orchestrator Agent (or a controller, or planner) shouldn’t try to do everything. Instead, it should:

- Determine what needs to be done.

- Choose the right sub-agent(s) for the job.

- Collate, validate, or merge the results.

Think of it like a conductor directing a symphony. The conductor doesn’t play the instruments—they ensure that the right instrument plays at the right time.

This also lets you inject strategy into your agents. For example:

- If a task is simple, maybe it can be done by a fast, cheap model.

- If it’s ambiguous, maybe you want a human-in-the-loop.

- If a sub-agent fails, maybe you try another.

This orchestration layer allows you to apply logic, safeguards, or optimizations without changing your actual working agents—just like middleware in an MVC framework.
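A rough sketch of such an orchestration layer follows. The `Orchestrator` class, its field names, and the routing rules are assumptions made for illustration. The point is only that escalation, fallback, and validation live in one place, outside the working agents.

```python
from dataclasses import dataclass, field
from typing import Callable

Agent = Callable[[str], str]


@dataclass
class Orchestrator:
    """Decides who does the work and checks the result; does no work itself."""

    cheap: Agent                         # fast, inexpensive sub-agent tried first
    fallback: Agent                      # used only if the first one fails
    needs_human: Callable[[str], bool]   # hypothetical ambiguity check
    review_queue: list[str] = field(default_factory=list)

    def handle(self, task: str) -> str:
        # Ambiguous tasks are escalated to a human rather than guessed at.
        if self.needs_human(task):
            self.review_queue.append(task)
            return "queued for human review"
        # If a sub-agent fails, try another.
        try:
            result = self.cheap(task)
        except Exception:
            result = self.fallback(task)
        # Validate before returning anything to the caller.
        if not result.strip():
            raise ValueError("sub-agent returned empty output")
        return result


if __name__ == "__main__":
    def flaky(task: str) -> str:
        raise RuntimeError("model timeout")

    orch = Orchestrator(
        cheap=flaky,
        fallback=lambda t: f"handled: {t}",
        needs_human=lambda t: t.endswith("?"),
    )
    print(orch.handle("summarize report"))  # handled: summarize report
    print(orch.handle("which customer?"))   # queued for human review
```

Changing the escalation rule or adding a retry policy means editing `Orchestrator` only. The sub-agents stay untouched.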

In the context of AI, this orchestration is a crucial part of AI research. Collaboration in this research area is essential to ensure AI safety and ethical alignment, especially as systems grow more autonomous. Various interdisciplinary efforts are focused on addressing these challenges and preventing harmful consequences.

Aggregation: Powerful Composition Without Inheritance Hell

In classic OOP, composition is often preferred over inheritance. That means instead of building huge hierarchies of subclasses, we combine smaller components to create new functionality.

This maps very well to AI agents, offering many benefits.

Rather than building one agent that knows how to do a dozen things, build multiple small agents that can be combined when needed:

- A Summarizer Agent could be used by both a Research Agent and a Meeting Notes Agent.

- A Translation Agent could be injected wherever multilingual content is needed.

- A Data Validation Agent could be used in many workflows for checking output before it’s saved or sent.

By designing with aggregation in mind, you avoid tight coupling. You can substitute components more easily (e.g., swap GPT-4 for Claude or Gemini), and you open the door to dynamic tool selection based on runtime needs. This approach also aligns with the many benefits of Standard Operating Procedures (SOPs), which minimize miscommunication and enhance company value.

This reduces costs and increases performance because you’re using only what you need, when you need it.
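To make the composition idea concrete, here is a small sketch in which one summarizer is shared by two different agents through constructor injection. The `Summarizer` protocol and the naive `FirstSentenceSummarizer` are hypothetical placeholders. In practice the summarizer would wrap a model call, and the agents using it would not need to change.

```python
from dataclasses import dataclass
from typing import Protocol


class Summarizer(Protocol):
    """Anything with a summarize() method can be plugged in."""

    def summarize(self, text: str) -> str: ...


class FirstSentenceSummarizer:
    """Toy implementation: keeps only the first sentence."""

    def summarize(self, text: str) -> str:
        return text.split(".")[0].strip() + "."


@dataclass
class ResearchAgent:
    summarizer: Summarizer  # composed, not inherited

    def report(self, sources: list[str]) -> list[str]:
        return [self.summarizer.summarize(s) for s in sources]


@dataclass
class MeetingNotesAgent:
    summarizer: Summarizer  # the same component, reused

    def recap(self, transcript: str) -> str:
        return "Recap: " + self.summarizer.summarize(transcript)


if __name__ == "__main__":
    shared = FirstSentenceSummarizer()
    print(ResearchAgent(shared).report(["A is big. More detail.", "B is small."]))
    # ['A is big.', 'B is small.']
    print(MeetingNotesAgent(shared).recap("We agreed on the plan. Next steps follow."))
    # Recap: We agreed on the plan.
```

Neither agent subclasses a common "summarizing agent" base class. Each simply holds a reference to whatever implementation it was given.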

Small, Targeted Agents Unlock Better Model Choices

Another major benefit of designing composable, single-purpose agents is that you can pick the right model for the job. AI-powered agents have a transformative impact across various industries, enhancing customer service, content creation, automating coding tasks, and analyzing medical data. These agents improve efficiency, decision-making, and overall productivity.

Not all tasks need GPT-4 or a fine-tuned domain-specific model. In fact, using a large, general-purpose model for everything is inefficient.

With smaller agents, you can:

- Use faster models (e.g., Claude Haiku or GPT-3.5) for simple lookups or formatting tasks.

- Use open-source models hosted in-house for cost-sensitive operations.

- Apply task-specific fine-tuning where the value justifies it.

- Route tasks based on data sensitivity, latency requirements, or jurisdiction.

For example, a summarisation task might work well on a cheaper model, while a negotiation or legal drafting task could justify the cost of a more sophisticated model.

This is only possible if your system is modular. If your agent is a blob of prompt logic, switching models becomes a risky, high-effort task. But if your summarizer is an isolated component, you can swap the engine behind it easily.

The economic benefits are real: better latency, lower cost, and less wasted compute.
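A routing rule of this kind can be very small. The sketch below is an assumption-laden illustration: the tier names ("small-fast", "large", "in-house-open-source") are made-up labels rather than real model identifiers, and the task kinds are examples drawn from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    kind: str                # e.g. "format", "summarize", "legal_draft"
    sensitive: bool = False  # True if the data must stay in-house


# Hypothetical tier labels, not real model names.
ROUTES: dict[str, str] = {
    "format": "small-fast",
    "lookup": "small-fast",
    "summarize": "small-fast",
    "legal_draft": "large",
}


def choose_model(task: Task) -> str:
    """Pick a model tier from data sensitivity first, then task kind."""
    if task.sensitive:
        return "in-house-open-source"
    # Unknown task kinds default to the most capable tier.
    return ROUTES.get(task.kind, "large")


if __name__ == "__main__":
    print(choose_model(Task("summarize")))                  # small-fast
    print(choose_model(Task("legal_draft")))                # large
    print(choose_model(Task("summarize", sensitive=True)))  # in-house-open-source
```

Defaulting unknown task kinds to the larger tier is one possible design choice. A cost-sensitive system might prefer to default to the cheap tier and escalate on failure.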

Applications of AI Agents

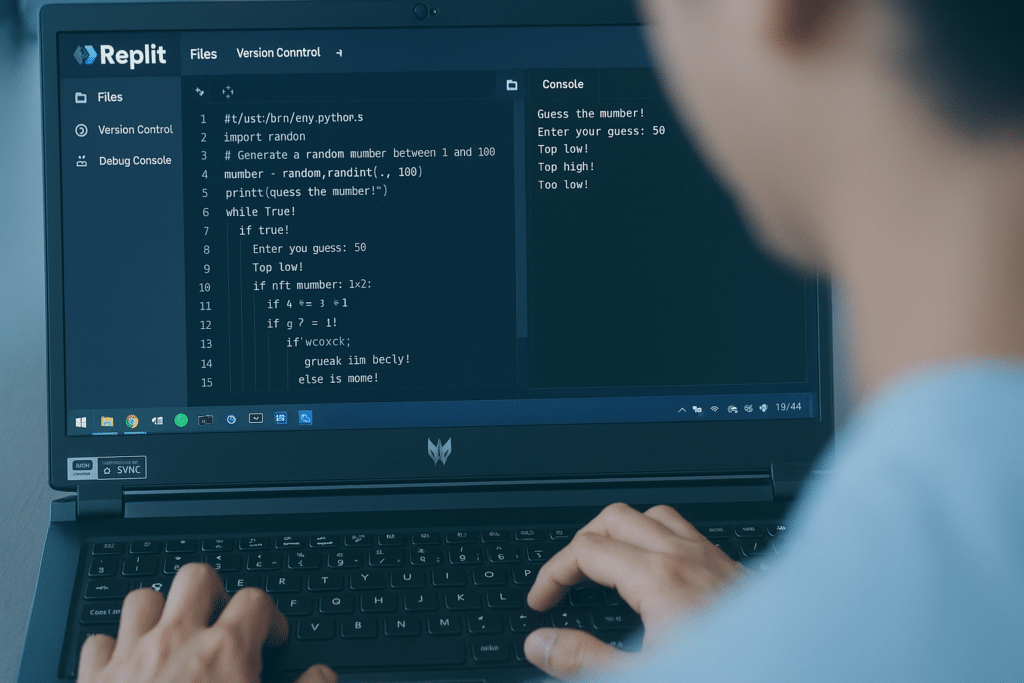

AI agents offer numerous benefits and can be applied across various industries, including customer service, employee productivity, creative tasks, data analysis, software development, and security. They can automate routine tasks, provide personalized customer experiences, and enhance self-service capabilities. In creative fields, AI agents can generate content, images, and ideas, assisting with design, writing, personalization, and campaigns. For data scientists, AI agents can help uncover and act on meaningful insights from data, ensuring the factual integrity of their results. In software development, AI agents can accelerate the process through AI-enabled code generation and coding assistance, leading to faster deployment and cleaner, clearer code. However, developing and deploying AI agents can be computationally expensive and requires adherence to best practices, including ensuring data quality, model interpretability, and establishing effective feedback loop mechanisms.

Reducing Key-Person Risk and Supporting Your Team

Agentic systems often get built in the style of the person who started them. Without clear patterns and modularity, knowledge becomes siloed and changes become risky. Implementing a standard operating procedure (SOP) for structuring processes ensures consistency and clarity, reducing these risks.

This is where OOP-style design helps again.

Smaller agents with well-defined interfaces are easier to:

- Document

- Test

- Understand

- Refactor

- Hand over

This helps onboard new developers and reduces the key-person dependency that plagues early AI projects.

It also means that non-technical team members (analysts, designers, product managers) can participate more easily. They don’t need to understand a 200-line planning prompt—they can review the output of a single summarizer or feedback generator and give targeted feedback.

By deconstructing logic into smaller blocks, you increase observability and accountability—which are critical for safe, scalable AI deployment.

Better Prompt Engineering Starts with Clear Responsibilities

Even your prompt design benefits from applying object-oriented thinking.

A monolithic prompt with five responsibilities is hard to optimize. Every change affects everything. You can’t easily evaluate its performance or debug its failure cases.

But if you isolate functions—“Summarise this”, “Extract these fields”, “Generate a polite rejection email”—then each one can be refined independently.

Language models tend to handle narrowly scoped functions like these more reliably than broad, multi-purpose prompts. However, it is crucial to address ethical considerations and implement safety measures to prevent misuse and ensure responsible deployment.

You can A/B test different templates, add few-shot examples, control output format, or try chain-of-thought reasoning just for one step.

This leads to more predictable, reusable, and auditable systems.
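As a sketch of one isolated prompt function, the "Extract these fields" step might pair a single template with a strict parser for its output. The template wording, function names, and JSON convention here are assumptions for illustration, and no model is called. The parser is what makes the step easy to test and audit.

```python
import json
from string import Template

# One prompt, one responsibility, one well-defined output format.
EXTRACT_PROMPT = Template(
    "Extract the fields $fields from the text below. "
    "Reply with a single JSON object and nothing else.\n\n$text"
)


def build_extract_prompt(text: str, fields: list[str]) -> str:
    """Fill the template; changing it affects only this step."""
    return EXTRACT_PROMPT.substitute(fields=", ".join(fields), text=text)


def parse_extraction(reply: str, fields: list[str]) -> dict[str, str]:
    """Validate a model reply against the expected fields."""
    data = json.loads(reply)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [f for f in fields if f not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return {f: str(data[f]) for f in fields}


if __name__ == "__main__":
    print(build_extract_prompt("Ada is an engineer.", ["name", "role"]))
    # A hand-written reply stands in for real model output.
    print(parse_extraction('{"name": "Ada", "role": "engineer"}', ["name", "role"]))
```

With the prompt and its validator kept together, an A/B test of two templates only needs to compare how often `parse_extraction` succeeds on the same inputs.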

Versioning, Testing, and Maintenance: Easier with Modularity

One of the unsung benefits of OOP is that it makes versioning and testing easier.

The same is true for AI agents and machine learning models. However, machine learning models come with potential vulnerabilities, such as trojans and backdoors, that malicious actors can exploit. Ensuring the security and alignment of these models is crucial. Understanding their inner workings can help identify and mitigate these risks.

When your agents are modular:

- You can test them independently.

- You can version them independently.

- You can cache expensive operations.

- You can log or trace behaviour with minimal overhead.

This enables proper CI/CD pipelines for agent-based systems. You can deploy changes safely, roll back broken logic, and continuously improve performance.
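The points in the list above can be sketched with a thin wrapper around any agent function. `VersionedAgent` is a hypothetical helper, not part of any framework. It tags results with a version, caches by (version, input) so a new version never serves stale output, and counts real calls so a test can check the cache.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class VersionedAgent:
    """Wraps an agent function with a version tag and a simple cache."""

    name: str
    version: str
    fn: Callable[[str], str]
    cache: dict[tuple[str, str], str] = field(default_factory=dict)
    calls: int = 0  # number of times fn actually ran

    def run(self, text: str) -> str:
        key = (self.version, text)  # a version bump invalidates old entries
        if key not in self.cache:
            self.calls += 1
            self.cache[key] = self.fn(text)
        return self.cache[key]


def test_uppercase_agent_caches() -> None:
    """The kind of isolated test a CI pipeline could run per agent."""
    agent = VersionedAgent("shout", "1.0.0", str.upper)
    assert agent.run("hi") == "HI"
    assert agent.run("hi") == "HI"
    assert agent.calls == 1  # second call came from the cache


if __name__ == "__main__":
    test_uppercase_agent_caches()
    print("ok")
```

The test needs no prompt chain, dataset, or tool setup. It exercises one agent in isolation.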

In contrast, tightly coupled monolithic agents are much harder to evolve. Testing them often requires recreating entire prompt chains, datasets, or tool setups.

So again: small is beautiful.

Avoiding Complex Patterns: Keep It Practical

One important caveat: this isn’t about bringing back the full Gang of Four design pattern catalogue. You don’t need abstract factories or visitor patterns to build AI agents.

In fact, overcomplicating the architecture is a fast path to failure. Many AI workloads are probabilistic and context-dependent—so you want lightweight, pragmatic design, not theoretical elegance. When developing AI technology, it is crucial to address the broader implications and risks associated with AI development. AI safety is a crucial area of research that addresses the ethical, societal, and technical challenges posed by advanced AI models, highlighting the need for robust methods to ensure that such technologies are applied beneficially and safely.

Stick to the basics:

- Single responsibility

- Composition over inheritance

- Explicit orchestration

- Clear separation of concerns

These were always the best parts of object-oriented design—and they’re more than enough for building scalable, maintainable agent systems.

The Future Is Agent-Oriented, But the Principles Are Old

As we move from single-prompt applications to autonomous, multi-tool, multi-agent workflows, we’re entering a new era of software engineering. AI applications, particularly those leveraging enterprise data, are enhancing efficiency across various industries.

But we don’t need to invent a new design philosophy from scratch.

Classic software design still has plenty to teach us—especially when it comes to clarity, structure, and decomposition. Object-oriented thinking, with its emphasis on modularity, encapsulation, and responsibility, offers a clear path forward.

By designing composable, orchestrated, small-purpose agents, we:

- Improve performance and latency

- Reduce compute and model costs

- Gain flexibility in model and tool choice

- Enhance reliability and testability

- Empower more team members to contribute

- Future-proof our systems against model changes or API decay

In short: we build systems that scale—not just technically, but organizationally.

As AI continues to evolve, it’s worth remembering that some of the most valuable ideas are the ones we already know.

It turns out that the best way to build next-generation agents… might be to think like a 1990s software engineer, after all.

Talk Think Do is a Microsoft Solutions Partner, Learnosity Partner, and certified CCS supplier. We support businesses with cloud application development, DevOps implementation, and custom generative AI integration using Microsoft Azure OpenAI services. If you’re interested in integrating a custom AI solution today, book a free consultation to speak to a member of the team.
